Fair Trials has learned that Durham police used an AI profiling tool, the Harm Assessment Risk Tool (HART), to assess more than 12,000 people between 2016 and 2021. Previous analysis and studies on HART showed the system has many serious flaws, including the deliberate over-estimation of people’s likelihood of re-offending, and the use of racist data profiles. However, until now the extent of its use had not been made public. Fair Trials has called for a ban on predictive policing tools such as HART.

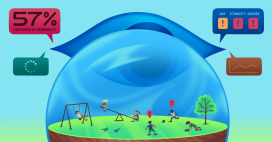

The machine-learning algorithm was used by Durham police to classify people arrested on suspicion of an offence as high, medium or low risk of committing a crime in future, deciding whether they were charged or diverted onto a rehabilitation programme called Checkpoint. Individuals who were assessed as ‘medium’ risk were eligible to undertake the Checkpoint rehabilitation programme, and if they successfully completed it, they avoided being charged and prosecuted. Individuals who were assessed as ‘high’ risk were not eligible for diversion from prosecution.

The figures show that 12,200 people were assessed by HART 22,265 times between 2016 and 2021.

Most assessments classed people as ‘moderate’ risk (12,262) or ‘low’ risk (7,111) of re-offending. However, 3,292 people were assessed as ‘high’ risk of re-offending. As a result, they are likely to have been charged and prosecuted rather than offered the opportunity for rehabilitation.

These figures provide context and detail to previously published investigations and studies on HART, and are especially worrying alongside the statistics on the ‘accuracy’ rate of the predictive tool, which according to their study released earlier this year was 53.8% – no better than a guess, or flipping a coin.

Griff Ferris, Senior Legal and Policy Officer at Fair Trials, said:

“We now know that thousands of people have been profiled by this flawed police AI system, using racist stereotypes and historic data to predict whether people would commit crime in future.

“But it’s not clear whether people were ever told that the criminal charges they subsequently faced were influenced or even decided by an algorithm, let alone whether they were able to appeal against the decision. We’re calling for Durham police to immediately release details of how many people were charged and prosecuted as a result of these predictions.

“The data these predictive policing systems use always results in historic discrimination being hardwired into policing and criminal justice decisions, exacerbating and reinforcing racism and inequality in the criminal legal system. Seeking to predict people’s future behaviour and punish them for it is completely incompatible with the right to be presumed innocent until proven guilty. These systems must be banned.”

Discriminatory data profiles

Durham police have also been criticised for their use of crude and discriminatory data profiles bought from Experian to feed into HART’s decision-making. The Experian postcode ‘profiles’ included the following categories: Asian Heritage, Disconnected Youth, Crowded Kaleidoscope, Families with Needs and Low Income Workers. Further offensive ‘characteristics’ were attributed to each of these profiles. For example, people classified as being Asian Heritage were described as: “extended families with children, in neighbourhoods with a strong South Asian tradition… living in low cost Victorian terraces… when people do have jobs, they are generally in low paid routine occupations in transport or food service”.

Another offensive profile, Crowded Kaleidoscope, was considered to be made up of “multi-cultural” families likely to live in “cramped” and “overcrowded flats”. Each profile was even assigned stereotypical names, with Abdi and Asha linked to Crowded Kaleidoscope. Low Income Workers were described as having “few qualifications” and being “heavy TV viewers” with names like Terrence and Denise, while Families with Needs were considered to receive “a range of benefits” and have names like Stacey. Durham Constabulary paid Experian for this profiling information.

Durham police were aware of the potential for the Experian postcode profile data to lead to biased decisions, with a study of HART in 2017 stating that, “some of the predictors used in the model… (such as postcode) could be viewed as indirectly related to measures of community deprivation.”

They were also aware as far back as 2017 of the potential for the postcode variables to create ‘feedback loops’, reinforcing existing bias in policing and criminal justice:

“One could argue that this variable risks a kind of feedback loop that may perpetuate or amplify existing patterns of offending. If the police respond to forecasts by targeting their efforts on the highest-risk postcode areas, then more people from these areas will come to police attention and be arrested than those living in lower-risk, untargeted neighbourhoods. These arrests then become outcomes that are used to generate later iterations of the same model, leading to an ever-deepening cycle of increased police attention.”

Despite this, they continued to use the predictive tool until 2021.

Durham Constabulary said earlier this year that they ceased using HART “due to the resources required to constantly refine and refresh the model to comply with appropriate ethical and legal oversight and governance.” This contrasts with the reasons usually given for using these types of predictive and analytical systems: to save on cost, time and resources, and to improve efficiency.

Durham Constabulary have not released data on how many people were actually diverted onto the Checkpoint rehabilitation programme, or how many were charged and prosecuted, following a HART assessment.